AdaptSim: Task-Driven Simulation Adaptation for Sim-to-Real Transfer

Allen Z. Ren, Hongkai Dai, Benjamin Burchfiel, Anirudha Majumdar

Princeton University, Toyota Research Institute

Conference on Robot Learning (CoRL), 2023



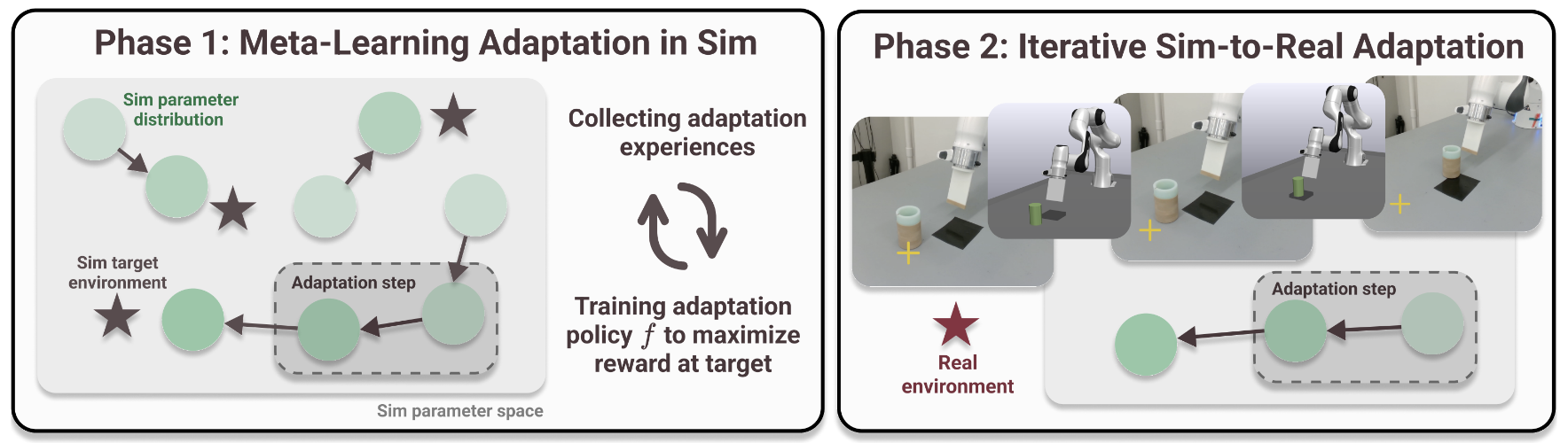

We propose AdaptSim, a task-driven sim-to-real adaptation framework that optimizes task performance in target (real) environments by iteratively updating simulation parameters for policy training based on real rollouts.

Method Overview

How should we specify simulation parameters to maximize policy performance in the real world while minimizing the amount of real-world data required? This is an extremely challenging problem in contact-rich manipulation tasks, where a substantial part of the sim-to-real gap is “irreducible”. The exact object geometry is difficult to specify, deformations are not yet maturely implemented in simulators, and contact models such as point contact poorly approximate complex real-world contact behavior. In this case, real environments can be out-of-domain from simulation, and techniques such as adaptive domain randomization, which perform Sys-ID of simulation parameters based on real rollouts, might fail to train a useful policy for the real world.


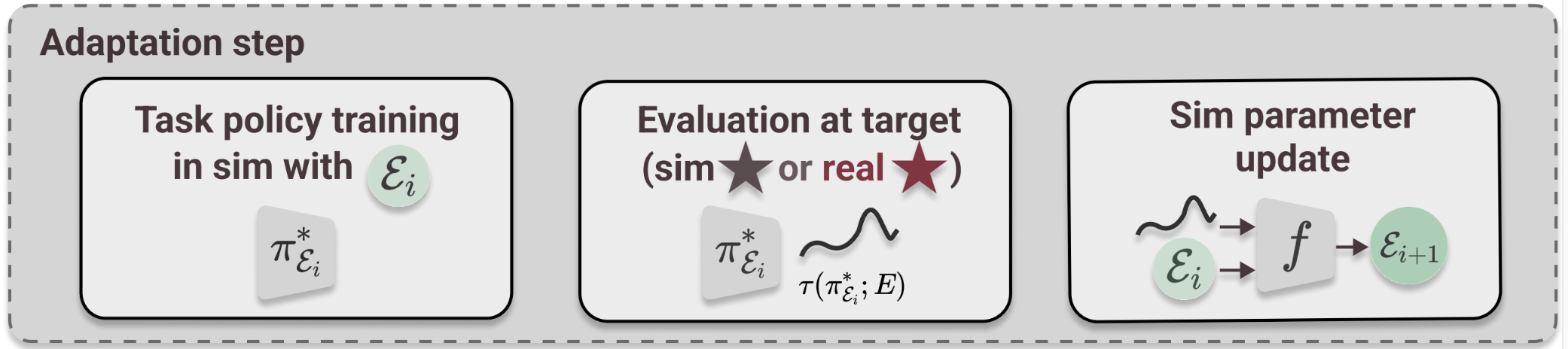

The simulation parameters are updated as follows: the task policy is trained with the current simulation parameter distribution, then evaluated in the target environment to obtain the resulting object trajectory, and finally the adaptation policy takes the current distribution and the trajectory and outputs the new simulation parameter distribution.
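The update loop described above can be sketched as a minimal toy example. Everything here is an assumption made for illustration: the single friction parameter, the closed-form "training" step, the 1-D sliding model, and in particular the hand-written `adaptation_policy` rule, which only stands in for the learned adaptation policy and is not the method from the paper.

```python
import math
import random
from dataclasses import dataclass

GOAL = 1.0       # desired sliding distance in metres (toy value)
GRAVITY = 9.81   # m/s^2


@dataclass
class ParamDist:
    """Gaussian over one simulation parameter (a friction coefficient here)."""
    mean: float
    std: float


def train_task_policy(dist, n_samples=200, seed=0):
    """Hypothetical stand-in for policy training in simulation.

    Samples friction values from the current distribution and returns the
    push speed that would stop the object at GOAL under their average.
    """
    rng = random.Random(seed)
    frictions = [max(1e-3, rng.gauss(dist.mean, dist.std)) for _ in range(n_samples)]
    avg = sum(frictions) / n_samples
    return math.sqrt(2.0 * avg * GRAVITY * GOAL)


def rollout_in_target(push_speed, true_friction=0.6):
    """Toy target environment: returns the object trajectory and task reward."""
    distance = push_speed ** 2 / (2.0 * true_friction * GRAVITY)
    trajectory = [0.0, distance]          # start and final position only
    reward = -abs(distance - GOAL)
    return trajectory, reward


def adaptation_policy(dist, trajectory):
    """Placeholder rule (NOT a learned policy): maps the current distribution
    and the observed trajectory to a new distribution."""
    distance = max(trajectory[-1], 1e-6)
    scale = math.sqrt(GOAL / distance)    # damped correction toward the goal
    return ParamDist(mean=dist.mean * scale, std=dist.std * 0.8)


def adapt(dist, n_iters=5):
    rewards = []
    for _ in range(n_iters):
        policy = train_task_policy(dist)                  # 1. train in sim
        trajectory, reward = rollout_in_target(policy)    # 2. evaluate in target
        rewards.append(reward)
        dist = adaptation_policy(dist, trajectory)        # 3. update distribution
    return dist, rewards


final_dist, rewards = adapt(ParamDist(mean=0.2, std=0.1))
```

In this toy setting the reward improves over iterations because each real rollout nudges the parameter distribution toward values that produce a better task policy.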


Because the adaptation policy is trained using task reward, the simulation parameters are not required to match the dynamics in the target environment, as long as the trained task policy performs well there. This allows AdaptSim to adapt to real environments where the dynamics are not well captured by the simulation.
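A small numeric illustration of this point, under assumptions of our own: a 1-D sliding model, a made-up speed-dependent energy loss that the simulator does not model (a stand-in for effects such as bottle rotation), and a plain grid search in place of the learned adaptation policy. It compares choosing a friction value by matching one probe rollout (Sys-ID style) with choosing it by the target reward of the policy trained on it.

```python
import math

GOAL = 1.0            # desired sliding distance in metres (toy value)
GRAVITY = 9.81        # m/s^2
TRUE_FRICTION = 0.3   # friction of the toy target environment


def target_distance(speed):
    """Toy target dynamics with an unmodeled, speed-dependent energy loss."""
    loss = min(0.5, 0.15 * speed)
    return (1.0 - loss) * speed ** 2 / (2.0 * TRUE_FRICTION * GRAVITY)


def train_policy(friction):
    """Push speed that reaches GOAL in a simulator with pure sliding friction."""
    return math.sqrt(2.0 * friction * GRAVITY * GOAL)


def task_reward(friction):
    """Target-environment reward of the policy trained with this friction."""
    return -abs(target_distance(train_policy(friction)) - GOAL)


# Sys-ID style: pick the friction that reproduces one probe rollout in sim.
probe_speed = 1.0
observed = target_distance(probe_speed)
mu_sysid = probe_speed ** 2 / (2.0 * GRAVITY * observed)

# Task-driven: pick the friction whose trained policy scores best in the target.
candidates = [0.05 + 0.01 * i for i in range(96)]   # 0.05 ... 1.00
mu_task = max(candidates, key=task_reward)

print(f"Sys-ID friction      {mu_sysid:.3f}  reward {task_reward(mu_sysid):.3f}")
print(f"Task-driven friction {mu_task:.3f}  reward {task_reward(mu_task):.3f}")
```

In this toy the task-driven choice lands far from the true friction coefficient yet gives the higher task reward, because the simulator cannot represent the extra loss and compensates through the one parameter it has.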

Task Setup

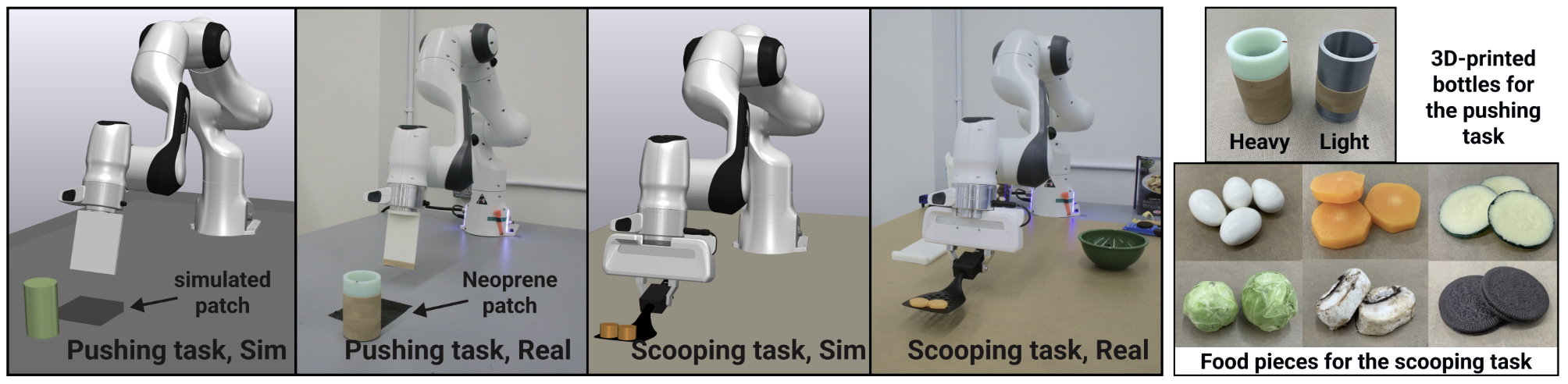

We consider two real-world manipulation tasks. (1) Dynamic table-top pushing of a bottle: the bottle often rotates in the real world, which is not modeled in simulation; we also attach a small piece of high-friction Neoprene rubber to the table, which decelerates the bottle and further complicates the task dynamics. (2) Dynamic scooping of food pieces with a spatula: this is a challenging task that requires intricate planning of the scooping trajectory, and we notice that humans cannot complete it consistently without a few practice trials.


Experiment Results


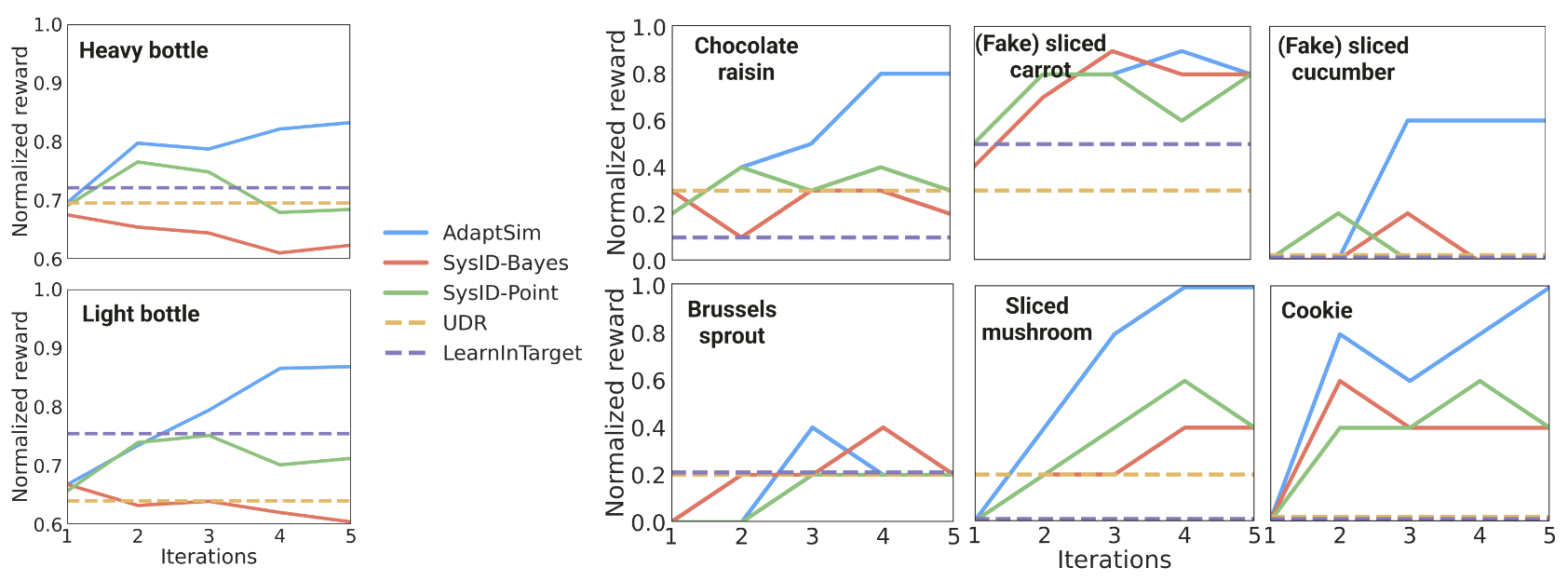

The figure shows the average reward achieved at each adaptation iteration in the pushing and scooping tasks. AdaptSim achieves the best performance and real-rollout efficiency in almost all environments. The difference is larger in the scooping task, where some baselines fail to scoop up the pieces in every iteration.


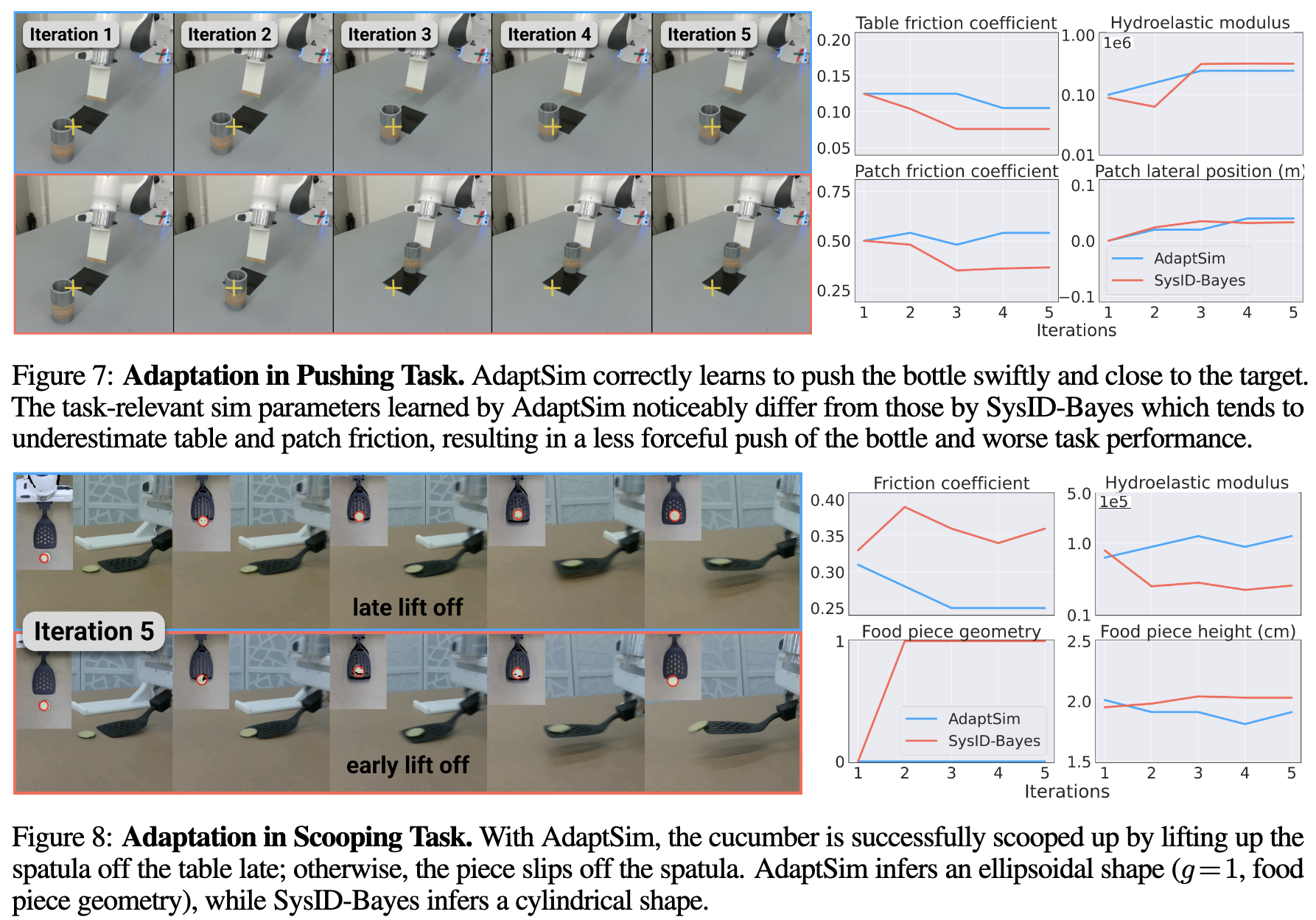

In the two environments shown, SysID-Bayes underperforms AdaptSim, and the simulation parameter distributions found by the two approaches differ visibly. In the pushing task, SysID-Bayes infers table and patch friction coefficients that are too low, and the trained task policy pushes the bottle with little speed. In the scooping task, interestingly, AdaptSim infers an ellipsoidal shape for the sliced cucumber even though the piece resembles a very thin cylinder, and the task policy achieves a 60% success rate. SysID-Bayes infers a cylindrical shape, but the task policy fails completely.


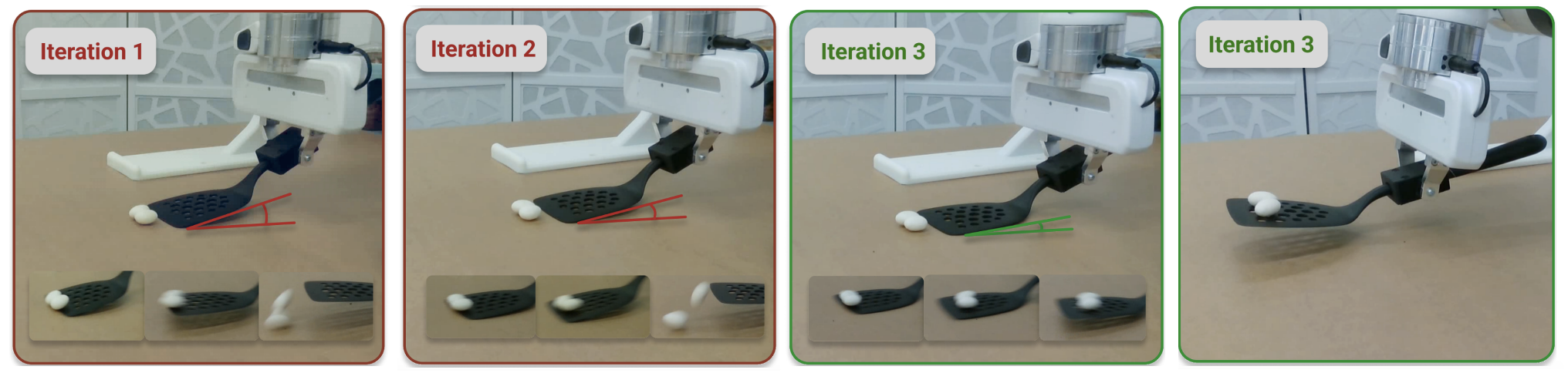

Scooping up chocolate raisins over iterations: the task policy learns to scoop with a shallower angle.

Scooping up (fake) sliced cucumber over iterations: the task policy learns to start scooping closer to the piece.

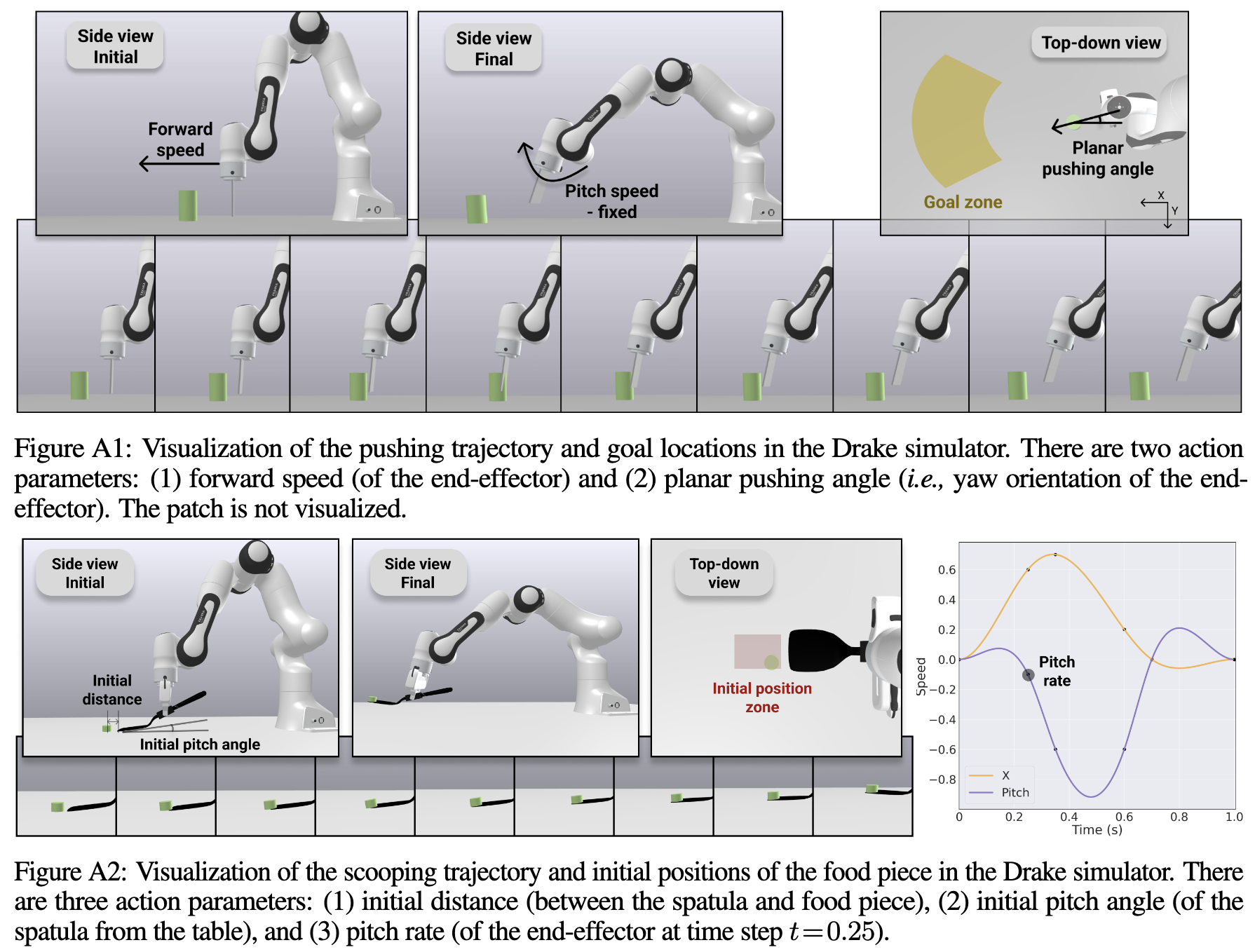

Drake Simulation

To our knowledge, this is the first work to run large-scale policy training in Drake, a physically realistic simulator suited to contact-rich manipulation tasks. We find that Drake's hydroelastic contact model is critical to simulating the scooping task: with the traditional point contact model, we were never able to simulate dynamic scooping. We have made the simulation environments available here.


Acknowledgements

The authors were partially supported by the Toyota Research Institute (TRI), the NSF CAREER Award [#2044149], and the Office of Naval Research [N00014-23-1-2148, N00014-21-1-2803]. This article solely reflects the opinions and conclusions of its authors and not NSF, ONR, TRI or any other Toyota entity. The authors would like to thank the Dynamics and Simulation team and the Dexterous Manipulation team at TRI Robotics for technical support and guidance. We also thank Yimin Lin for helpful discussions.